Weak Supervision

Some intuition

- Weak supervision: The use of high-level or noisy data sources to provide the training labels for ML models

- The goal is to generate large labelled datasets more rapidly than is possible through manual annotation

- Useful when a problem can be solved with lots of imperfect labels rather than a small number of perfect labels

- Analysing when, how, and where different labelling functions agree or disagree with each other determines when, and how much, to trust them

- Data sources range from high to low quality

- Example domains: Time series analysis, video and image classification, text and document classification
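To make "agree or disagree" concrete, the sketch below computes per-labelling-function coverage, overlap and conflict rates from a label matrix. It is a minimal, library-free illustration of the kind of summary statistics weak-supervision tools report, not any particular tool's implementation. The function name `lf_summary` and the convention that `-1` means "abstain" are assumptions made for this example.

```python
import numpy as np

ABSTAIN = -1  # assumed convention: -1 means the labelling function abstained


def lf_summary(L):
    """Coverage, overlap and conflict rate of each labelling function (hypothetical helper).

    L has shape (n_points, n_lfs); entries are ABSTAIN, 0 or 1.
    """
    L = np.asarray(L)
    voted = L != ABSTAIN                 # True where an LF emitted a label
    n_votes = voted.sum(axis=1)          # number of LFs voting on each point
    coverage = voted.mean(axis=0)        # fraction of points each LF labels
    # overlap: the LF labels a point that at least one other LF also labels
    overlap = (voted & (n_votes[:, None] > 1)).mean(axis=0)
    # conflict: the LF labels a point and some other LF gives a different label
    conflict = np.zeros(L.shape[1])
    for j in range(L.shape[1]):
        others = np.delete(L, j, axis=1)
        disagrees = (others != ABSTAIN) & (others != L[:, [j]])
        conflict[j] = (voted[:, j] & disagrees.any(axis=1)).mean()
    return coverage, overlap, conflict


L = [[1, 1, -1],
     [0, 1, -1],
     [-1, -1, 0],
     [1, -1, 1]]
coverage, overlap, conflict = lf_summary(L)
```

A labelling function with high conflict against otherwise consistent functions is a candidate for down-weighting; learning those weights from the agreement structure is what the label models discussed later do.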

What weak supervision isn’t

Rule-based classifiers: Use rules to perform the classification itself, not just as labelling functions

Cannot generalise beyond the rules that have been defined

Weak supervision uses labelling functions only to generate labels, then trains an ML model on those labels to classify

Semi-supervised learning (SSL) - Using a small amount of labelled data and lots of unlabelled data for training

Propagates knowledge from what is already labelled to label more

Weak supervision lets you inject what you already know (e.g. domain heuristics) to label more

SSL is akin to smoothing the edges, whereas weak supervision discovers and addresses new uses
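One classic way SSL "propagates knowledge from what is already labelled" is graph-based label propagation: labels spread to nearby unlabelled points through a similarity graph while the known labels stay clamped. The sketch below is a minimal version of that idea, assuming a Gaussian similarity with bandwidth `sigma` and `-1` to mark unlabelled points; the function name and parameters are illustrative, not a specific library's API.

```python
import numpy as np


def label_propagation(X, y, sigma=1.0, n_iter=100):
    """Iterative label propagation (sketch). y holds class indices, -1 for unlabelled."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n = len(y)
    classes = np.unique(y[y >= 0])
    # Gaussian affinity between every pair of points
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-d2 / (2 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    P = W / W.sum(axis=1, keepdims=True)   # row-normalised transition matrix
    labelled = y >= 0
    Y0 = np.zeros((n, len(classes)))
    Y0[labelled, np.searchsorted(classes, y[labelled])] = 1.0
    F = Y0.copy()
    for _ in range(n_iter):
        F = P @ F                          # spread label mass to neighbours
        F[labelled] = Y0[labelled]         # clamp the known labels
    return classes[F.argmax(axis=1)]


X = [[0.0], [0.1], [0.2], [5.0], [5.1], [5.2]]
y = [0, -1, -1, 1, -1, -1]                 # one labelled point per cluster
pred = label_propagation(X, y)
```

Note that nothing new is injected here: every propagated label originates from an existing labelled point, which is the contrast with weak supervision drawn above.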

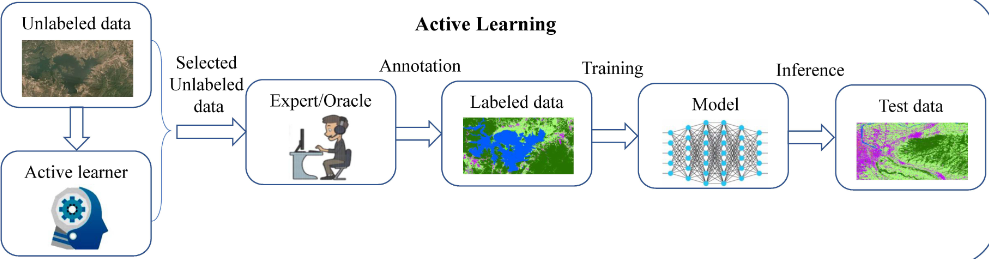

The case of active learning

Active learning can be used as a form of SSL

The learner iteratively selects the data points it is most uncertain about, or that are the most informative

A human oracle labels this smaller subset, reducing the overall amount of data that must be labelled manually

May introduce bias into the data the model learns from
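The notes do not fix a particular uncertainty measure; a common choice is least-confidence sampling, sketched below. Given the current model's predicted class probabilities for the unlabelled pool, it returns the `k` points whose top-class probability is lowest; these would go to the human oracle, and the model would then be retrained. The function name `least_confident` is hypothetical.

```python
import numpy as np


def least_confident(probs, k):
    """Indices of the k pool points the model is least confident about (sketch)."""
    probs = np.asarray(probs, dtype=float)   # shape (n_pool, n_classes)
    confidence = probs.max(axis=1)           # probability of the model's best guess
    return np.argsort(confidence)[:k]        # lowest confidence first


probs = [[0.90, 0.10],
         [0.55, 0.45],
         [0.20, 0.80],
         [0.50, 0.50]]
to_label = least_confident(probs, k=2)       # these go to the oracle
```

Because selection depends entirely on the current model, the labelled set drifts towards regions that model finds hard, which is one source of the bias mentioned above.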

The case of active learning continued

Active learning

- Labels are generated by expensive human annotators, often subject-matter experts (SMEs)

- Labels assumed to be accurate and single-sourced

- More expensive labelling process

- Iterative process: labelling is done in a human-in-the-loop fashion until the results are satisfactory

Weak supervision

- Label sources are flexible, and there is often more than one

- Labelled data is often inaccurate and incomplete

- Can label up to millions of data points automatically

- Weak supervision models are trained fully once all labels have been gathered

WeakAL is a new research domain that combines the two

Let’s have a go at it!

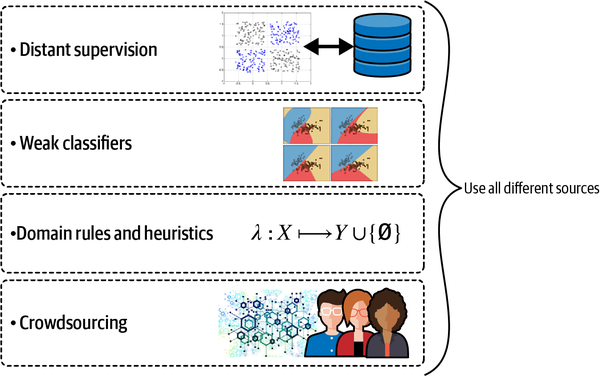

- Crowdsourcing

- Automatic labelling functions

- Mixed level of expert annotation

Data Programming

A novel paradigm for programmatically creating large labelled training sets using weak supervision, shifting the focus from feature engineering to data set creation

- Allows users to express domain heuristics, weak supervision sources, or external knowledge as labelling functions

- Labelling functions are noisy, potentially conflicting programs that each label a subset of the data

- Distinct from crowdsourcing and distant supervision
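As an illustration of labelling functions as programs, the sketch below encodes three heuristics for a toy spam-detection task. Each function either returns a label or abstains, and applying all of them produces the label matrix that the generative model then de-noises. The task, the label scheme and all function names are hypothetical examples, not taken from the paper.

```python
import re

ABSTAIN, HAM, SPAM = -1, 0, 1  # hypothetical label scheme for a toy spam task


def lf_contains_link(text):
    # heuristic: messages containing URLs are often spam
    return SPAM if re.search(r"https?://", text) else ABSTAIN


def lf_mentions_prize(text):
    # heuristic: "prize" is a common spam keyword
    return SPAM if "prize" in text.lower() else ABSTAIN


def lf_short_reply(text):
    # heuristic: very short messages tend to be genuine replies
    return HAM if len(text.split()) <= 3 else ABSTAIN


LFS = [lf_contains_link, lf_mentions_prize, lf_short_reply]


def apply_lfs(texts, lfs=LFS):
    """Label matrix: one row per data point, one column per labelling function."""
    return [[lf(t) for lf in lfs] for t in texts]


texts = ["Claim your prize at http://x.co",
         "thanks, see you",
         "Meeting moved to 3pm tomorrow"]
L = apply_lfs(texts)
```

The last message receives no label at all, which shows why coverage matters: points that every labelling function abstains on contribute nothing to training.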

The authors propose a generative model of the labelling process, in which the accuracies and correlations of the labelling functions are learned and used to de-noise the labels, achieving performance comparable to supervised methods

- A noise-aware discriminative loss function accounts for the noise in the labels, enabling logistic regression and LSTM models to be trained on them

- Effective with hand-crafted features and features generated automatically from LSTMs
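One way to read "noise-aware loss" for a binary task is to minimise the logistic loss in expectation over the probabilistic label \(p_i = \Pr(y_i = 1)\) produced by the generative model, which amounts to cross-entropy against soft targets. The sketch below trains logistic regression this way with plain gradient descent; the learning rate, iteration count and function names are illustrative assumptions, not the paper's settings.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def add_bias(X):
    X = np.asarray(X, dtype=float)
    return np.hstack([X, np.ones((len(X), 1))])   # append a bias column


def noise_aware_logreg(X, p, lr=0.5, n_iter=2000):
    """Logistic regression on probabilistic labels p[i] = P(y_i = 1) (sketch)."""
    Xb = add_bias(X)
    p = np.asarray(p, dtype=float)
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        # gradient of the expected loss mean_i[-p_i log s_i - (1 - p_i) log(1 - s_i)],
        # where s_i = sigmoid(x_i . w)
        grad = Xb.T @ (sigmoid(Xb @ w) - p) / len(p)
        w -= lr * grad
    return w


def predict_proba(w, X):
    return sigmoid(add_bias(X) @ w)


X = [[-2.0], [-1.0], [1.0], [2.0]]
p = [0.1, 0.3, 0.7, 0.9]           # soft labels from a label model (illustrative)
w = noise_aware_logreg(X, p)
```

Compared with rounding `p` to hard labels, the soft targets tell the model how much to trust each training point.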

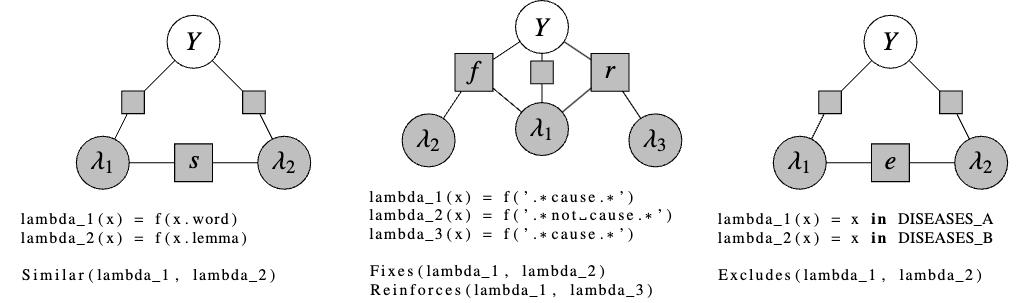

Data programming continued

Even in the simple setting of binary classification, a complex set of dependencies can exist between the labelling functions and needs to be modelled

User-defined dependency graphs can help model these relationships, but they can become unwieldy

Data programming performance depends on the quality of the labelling functions, the specific task, and the nature of the weak supervision sources involved

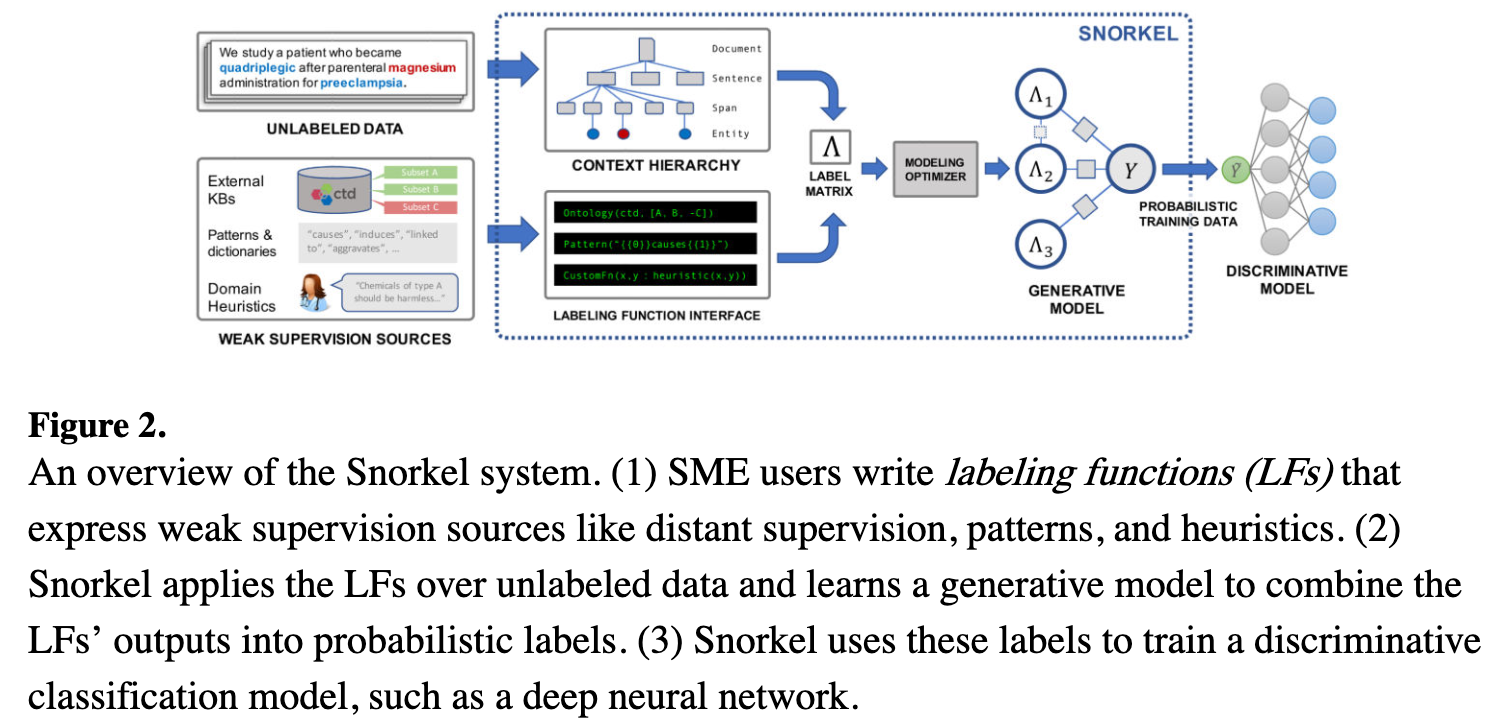

Snorkel AI

- First system to employ data programming, allowing users to write labelling functions which are noisy and potentially conflicting

- Denoises labels using a generative model, whose probabilistic labels are then used to train a discriminative model (e.g. a DNN)

- The key innovation is combining multiple weak supervision sources without any ground truth
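If labelling functions are assumed conditionally independent given the true label, each with a known accuracy that does not depend on the class, the posterior log-odds of the true label is the prior log-odds plus an accuracy-weighted vote. The sketch below turns a label matrix into probabilistic labels this way. In Snorkel the accuracies are learned from agreements and disagreements without ground truth; here they are simply assumed given, and `probabilistic_labels` is a hypothetical name.

```python
import numpy as np

ABSTAIN = -1  # assumed convention: -1 means abstain, otherwise labels are 0 / 1


def probabilistic_labels(L, accuracies, prior=0.5):
    """P(y = 1 | votes) for conditionally independent LFs with known, symmetric accuracies."""
    L = np.asarray(L)
    acc = np.asarray(accuracies, dtype=float)
    votes = np.where(L == ABSTAIN, 0, np.where(L == 1, 1, -1))  # +1 / -1, 0 for abstain
    weights = np.log(acc / (1 - acc))          # more accurate LFs get larger weights
    log_odds = np.log(prior / (1 - prior)) + votes @ weights
    return 1.0 / (1.0 + np.exp(-log_odds))


L = [[1, 0, -1],     # LFs disagree; unweighted majority vote would tie
     [-1, -1, -1],   # nobody votes: falls back to the prior
     [0, 0, 1]]
probs = probabilistic_labels(L, accuracies=[0.9, 0.6, 0.7])
```

The first row shows the point of weighting: the 0.9-accurate function outweighs the 0.6-accurate one, giving \(\Pr(y = 1) = 6/7\) where an unweighted vote could not decide.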

Snorkel AI continued

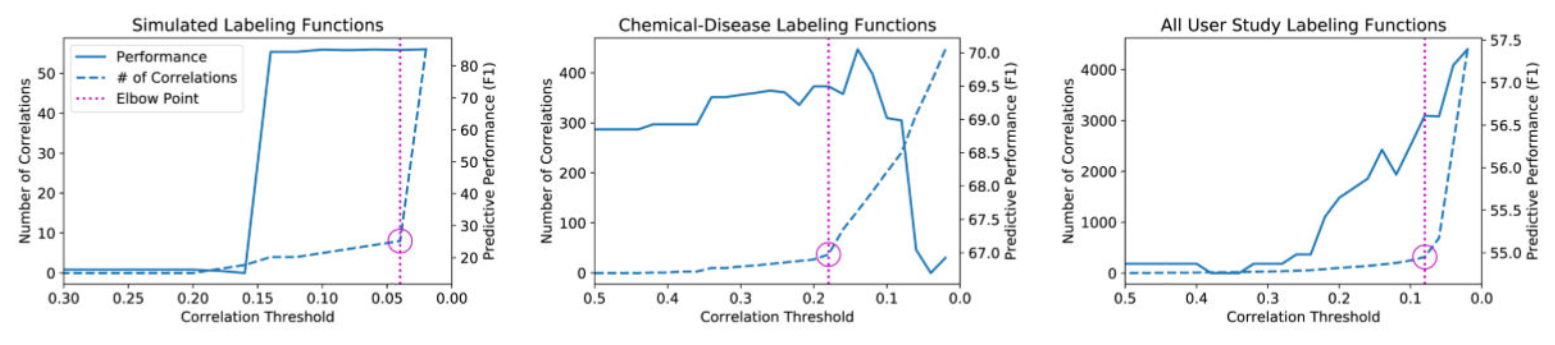

There is a trade-off between accuracy and computational cost: how do we choose an effective modelling strategy?

One option is a heuristic based on label density, the modelling advantage: the expected number of instances in which a weighted majority vote (MV) could flip an incorrect prediction of the unweighted MV, estimated under a best-case assumption
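The quantity behind this heuristic can be written down directly when gold labels are available: the fraction of points where the weighted vote is right but the unweighted MV is wrong (or tied), minus the fraction where the reverse happens. The sketch below computes that empirical version with illustrative weights; the best-case heuristic in the notes exists precisely to estimate it from label density without gold labels, so this is an illustration of the definition rather than the heuristic itself. The function name is hypothetical.

```python
import numpy as np

ABSTAIN = -1  # assumed convention: -1 means abstain, otherwise labels are 0 / 1


def modelling_advantage(L, weights, y):
    """Empirical advantage of a weighted vote over unweighted MV (sketch, needs gold y)."""
    L = np.asarray(L)
    votes = np.where(L == ABSTAIN, 0, np.where(L == 1, 1, -1))
    y_pm = np.where(np.asarray(y) == 1, 1, -1)         # gold labels as +1 / -1
    f_w = votes @ np.asarray(weights, dtype=float)    # weighted vote score
    f_1 = votes.sum(axis=1)                           # unweighted MV score
    fixes = (y_pm * f_w > 0) & (y_pm * f_1 <= 0)       # weighted right, MV wrong or tied
    breaks = (y_pm * f_w <= 0) & (y_pm * f_1 > 0)      # weighted wrong or tied, MV right
    return (fixes.sum() - breaks.sum()) / len(y_pm)


L = [[1, 0, -1], [0, 0, 1], [1, 1, 0]]
weights = np.log([9.0, 1.5, 7 / 3])                   # log-odds of accuracies 0.9, 0.6, 0.7
adv = modelling_advantage(L, weights, y=[1, 0, 1])
```

A small advantage suggests unweighted MV is good enough and the more expensive generative model can be skipped.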

It is hard to define a generative model (GM) that accounts for the dependencies between labelling functions

A pseudolikelihood estimator can be used to compute the objective gradient; this requires a threshold parameter that trades off predictive performance against computational cost, which can be estimated by finding the elbow point on a plot

Learning from Crowds

Learning from multiple noisy labels gathered from annotators, who often disagree substantially

The authors propose a probabilistic framework that jointly learns:

- A classifier / regressor

- The accuracy of each annotator

- The actual hidden true labels

For binary classification, each annotator’s performance is modelled by their sensitivity and specificity (as captured by a mixing matrix)

The Expectation Maximisation (EM) algorithm is used to iteratively estimate the true labels, annotator performance, and classifier parameters
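A stripped-down version of this EM loop is sketched below. It alternates between estimating each annotator's sensitivity \(\alpha^j\) and specificity \(\beta^j\) from the current soft labels (M-step) and recomputing the posterior of each hidden true label (E-step). To stay short it drops the classifier component and the Bayesian priors of the full framework, assumes every annotator labels every item, and clips probabilities away from 0 and 1; this is a Dawid-Skene-style simplification, and `em_annotators` is a hypothetical name.

```python
import numpy as np


def em_annotators(Y, n_iter=50, eps=1e-6):
    """EM for binary annotator labels Y[i, j] in {0, 1} (sketch, no classifier).

    Returns posterior P(y_i = 1), sensitivities alpha_j and specificities beta_j.
    """
    Y = np.asarray(Y, dtype=float)
    mu = Y.mean(axis=1)                            # initialise with the soft majority vote
    for _ in range(n_iter):
        # M-step: prevalence and per-annotator sensitivity / specificity
        p = mu.mean()
        alpha = np.clip((mu @ Y) / mu.sum(), eps, 1 - eps)                  # P(y^j=1 | y=1)
        beta = np.clip(((1 - mu) @ (1 - Y)) / (1 - mu).sum(), eps, 1 - eps)  # P(y^j=0 | y=0)
        # E-step: posterior of the hidden true label given all annotators' labels
        a = p * np.prod(alpha ** Y * (1 - alpha) ** (1 - Y), axis=1)
        b = (1 - p) * np.prod(beta ** (1 - Y) * (1 - beta) ** Y, axis=1)
        mu = a / (a + b)
    return mu, alpha, beta


# Annotators 0 and 1 are reliable; annotator 2 always answers 1
Y = [[1, 1, 1], [1, 1, 1], [0, 0, 1], [0, 0, 1], [1, 1, 1], [0, 0, 1]]
mu, alpha, beta = em_annotators(Y)
```

The always-positive annotator ends up with sensitivity near 1 but specificity near 0, so their votes carry almost no weight, which a simple majority vote cannot express. With many annotators, the products should be computed in log space to avoid underflow.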

A Bayesian approach allows prior knowledge about each annotator’s performance to be incorporated, giving a weighting beyond simple majority voting

Limitations: annotator performance is assumed to be independent of the instance features, annotators’ errors are assumed to be independent of each other, and the model doesn’t account for instances varying in difficulty

The Weak Supervision Landscape

Framework for categorising weak supervision settings, focussing on the true label space, the weak label space, and the weakening process.

True label space - Describes the nature of the task and the number of labels that can be assigned (e.g. multi-label)

Weak label space - Captures the flexibility in the annotation process

Weakening process - Describes how true labels are transformed into weak labels

WS settings split into non-aggregate and aggregate settings; there are many more non-aggregate settings

Non-aggregate settings:

- Noisy labels

- Partial labels

- Semi-supervised learning

- Positive-Unlabelled (PU) learning

- Multiple annotators

Aggregate settings:

- Multiple instance learning (MIL)

- Learning from label proportions (LLP)
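In the aggregate settings the weakening process maps a whole group (bag) of instances to a single weak label. The sketch below shows the two weakening processes for binary labels: in MIL a bag is labelled positive if any instance in it is positive, and in LLP only the proportion of positives in each bag is revealed. Representing bags as lists of instance indices is an assumption made for this example.

```python
import numpy as np


def mil_bag_labels(y, bags):
    """MIL weakening: a bag is positive if any of its instances is positive."""
    y = np.asarray(y)
    return [int(y[idx].max()) for idx in bags]


def llp_bag_proportions(y, bags):
    """LLP weakening: only the fraction of positive instances per bag is revealed."""
    y = np.asarray(y)
    return [float(y[idx].mean()) for idx in bags]


y = [0, 0, 1, 0, 1, 1]               # true instance labels (hidden from the learner)
bags = [[0, 1], [2, 3], [4, 5]]
mil = mil_bag_labels(y, bags)
llp = llp_bag_proportions(y, bags)
```

The second and third bags get the same MIL label but different LLP proportions, showing that the two settings discard different amounts of information.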

I promised one equation

Mixing matrix - A matrix that models the weakening process, describing the probability of transforming a true label into a weak label

- Can be used to reverse the noise process and make learning unbiased, via algorithms such as EM, but this can be very expensive

- Can also model annotators’ behaviour

For a binary classification problem, the mixing matrix for the \(j\)-th annotator (rows index the weak label \(y^j\), columns the true label \(y\)) is:

\[ M^j = \begin{bmatrix} \Pr(y^j = 0 \mid y = 0) & \Pr(y^j = 0 \mid y = 1) \\ \Pr(y^j = 1 \mid y = 0) & \Pr(y^j = 1 \mid y = 1) \end{bmatrix} \]

This can be rewritten in terms of the annotator’s specificity \(\beta^j = \Pr(y^j = 0 \mid y = 0)\) and sensitivity \(\alpha^j = \Pr(y^j = 1 \mid y = 1)\):

\[ M^j = \begin{bmatrix} \beta^j & 1 - \alpha^j \\ 1 - \beta^j & \alpha^j \end{bmatrix} \]
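A quick numerical check of the matrix, using illustrative values \(\alpha^j = 0.8\), \(\beta^j = 0.9\) and a true class prior of \([0.7, 0.3]\) (all assumptions for this example). Each column sums to 1 because it is a distribution over weak labels for a fixed true label. Multiplying the true-label distribution by \(M^j\) gives the distribution of the annotator's labels, and solving the linear system reverses that step, which is the simplest instance of "reversing the noise process" (it requires \(M^j\) to be invertible, i.e. \(\alpha^j + \beta^j \neq 1\)). Sampling weak labels from row 1 of \(M^j\) simulates the weakening process itself.

```python
import numpy as np


def mixing_matrix(alpha, beta):
    """M[weak, true] = Pr(y^j = weak | y = true) for one binary annotator."""
    return np.array([[beta, 1 - alpha],
                     [1 - beta, alpha]])


M = mixing_matrix(alpha=0.8, beta=0.9)       # illustrative sensitivity / specificity
true_prior = np.array([0.7, 0.3])            # [Pr(y = 0), Pr(y = 1)], illustrative
weak_prior = M @ true_prior                  # distribution of the annotator's labels
recovered = np.linalg.solve(M, weak_prior)   # undo the weakening step

# Simulate the weakening process: Pr(y^j = 1 | y) is row 1 of M, indexed by the true label
rng = np.random.default_rng(0)
y = rng.choice(2, size=100_000, p=true_prior)
y_weak = (rng.random(y.size) < M[1, y]).astype(int)
```

Here `weak_prior` is \([0.69, 0.31]\): the annotator over-reports the majority class slightly, and `recovered` returns the original \([0.7, 0.3]\).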